Zero to Kubernetes on Azure

Introduction

Kubernetes is a highly popular container management platform. If you have heard about it but haven’t had a chance to play with it, this post might help you get started.

In this guide, we will create a single-node kubernetes cluster, deploy a sample application into it from our private container registry, and finally configure the cluster to serve our content from a custom domain with a TLS certificate!

If this sounds interesting, then buckle up because this is going to be a really looooong post!

Prerequisites

Before we start, make sure that you have an active Visual Studio Subscription.

While we are creating and configuring a cluster we will make use of a couple of tools.

- Docker: we will need a local Docker installation to be able to build Docker images locally.

- Azure CLI is a suite of command-line tools that we are going to use heavily to manage our Azure resources. Once you have installed it, make sure you are logged in (az login) and your Visual Studio subscription is the active one.

- kubectl is a command-line tool that we will use to manage our kubernetes cluster.

- helm is a command-line tool that we will use to manage deployments to our kubernetes cluster.

Create the Kubernetes Cluster

OK, let’s get started.

First, we need to create a resource group which will contain all the resources that we are going to create later on.

Z:\>az group create -n kube-demo-group --location=westus2

{

"id": "/subscriptions/<redacted>/resourceGroups/kube-demo-group",

"location": "westus2",

"managedBy": null,

"name": "kube-demo-group",

"properties": {

"provisioningState": "Succeeded"

},

"tags": null,

"type": null

}

Next, we are going to create a single-node kubernetes cluster.

NOTE: I have chosen to create a single-node cluster purely because the cost of a multi-node cluster would exceed the monthly Visual Studio Subscription credit. If you are not planning to run the cluster for a month, feel free to increase the node count in the following command.

az aks create -n kube-demo --resource-group kube-demo-group --node-count 1 --node-vm-size Standard_B2ms

Creating a kubernetes cluster can take a while. When the operation completes, you should see the cluster information printed to the console as JSON.

{

"aadProfile": null,

"addonProfiles": null,

"agentPoolProfiles": [

{

"count": 1,

"maxPods": 110,

"name": "nodepool1",

"osDiskSizeGb": 100,

"osType": "Linux",

"storageProfile": "ManagedDisks",

"vmSize": "Standard_B2ms",

"vnetSubnetId": null

}

],

"dnsPrefix": "kube-demo-kube-demo-group-b86a0f",

"enableRbac": true,

"fqdn": "kube-demo-kube-demo-group-b86a0f-5107b82b.hcp.westus2.azmk8s.io",

"id": "/subscriptions/[redacted]/resourcegroups/kube-demo-group/providers/Microsoft.ContainerService/managedClusters/kube-demo",

"kubernetesVersion": "1.11.9",

"linuxProfile": {

"adminUsername": "azureuser",

"ssh": {

"publicKeys": [redacted]

}

},

"location": "westus2",

"name": "kube-demo",

"networkProfile": {

"dnsServiceIp": "10.0.0.10",

"dockerBridgeCidr": "172.17.0.1/16",

"networkPlugin": "kubenet",

"networkPolicy": null,

"podCidr": "10.244.0.0/16",

"serviceCidr": "10.0.0.0/16"

},

"nodeResourceGroup": "MC_kube-demo-group_kube-demo_westus2",

"provisioningState": "Succeeded",

"resourceGroup": "kube-demo-group",

"servicePrincipalProfile": { clientId: <redacted> },

"tags": null,

"type": "Microsoft.ContainerService/ManagedClusters"

}

NOTE: If you see an error message saying "An RSA key file or key value must be supplied to SSH Key Value. You can use --generate-ssh-keys to let CLI generate one for you", then try appending the --generate-ssh-keys option to the end of the previous command and run it again.

From now on, we are going to use the kubectl CLI to interact with our cluster. kubectl can work with multiple kubernetes clusters. The authentication information for each cluster is called a context.

We need to provide the context information of our cluster so that the commands we run point to our cluster.

We are going to use Azure CLI to pull the context information of the cluster and merge it into the kubectl configuration.

Z:\>az aks get-credentials -g kube-demo-group -n kube-demo

Merged "kube-demo" as current context in C:\Users\Ibrahim.Dursun\.kube\config

The context for our cluster is called kube-demo. Let’s make sure that it is the default context.

Z:\>kubectl config use-context kube-demo

Switched to context "kube-demo".

Awesome! Now, every command we run using kubectl is going to be executed against our kubernetes cluster.

Let’s request the list of active nodes in our cluster.

Z:\>kubectl get nodes

NAME STATUS ROLES AGE VERSION

aks-nodepool1-27011079-0 Ready agent 12m v1.11.9
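Since kubectl silently talks to whichever context is current, it can help to check the context before running anything against a cluster. The following is a minimal sketch, assuming a bash-compatible shell (Git Bash, WSL or Azure Cloud Shell) rather than the Windows prompt used in this post; the function name ensure_context is made up for illustration.

```bash
#!/usr/bin/env bash

# Switch kubectl to the wanted context only if it is not already current.
# ensure_context is a hypothetical helper name, not part of kubectl.
ensure_context() {
  local want="$1" current

  # "kubectl config current-context" prints the name of the active context.
  current="$(kubectl config current-context)" || return 1

  if [ "$current" != "$want" ]; then
    kubectl config use-context "$want"
  fi
}

# Example usage (needs a configured kubectl):
#   ensure_context kube-demo && kubectl get nodes
```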

Create an Azure Container Registry (ACR)

We have created a kubernetes cluster, but it is pretty useless on its own because we haven’t deployed anything to it.

Ideally, we would like to deploy containers from our own application images into our cluster.

One way of doing it is to push our application’s docker image to the public hub.docker.com under our user name, but this will make it publicly accessible. If this is not something you would like, the alternative is to create a private container registry.

A private container registry on Azure is called Azure Container Registry (ACR).

The following command creates an ACR resource with name kubeDockerRegistry on Azure. The full address of the container registry will be kubedockerregistry.azurecr.io.

NOTE: The name of the ACR needs to be unique. If the name is taken, an error message will be printed. Don’t forget to replace ACR name in the subsequent commands with the name you have chosen.
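A candidate registry name can be sanity-checked locally before it is sent to Azure. The sketch below assumes a bash-compatible shell and relies on two facts: ACR names must be 5 to 50 alphanumeric characters, and the login server is the lowercased name followed by .azurecr.io. It cannot tell whether the name is already taken; the function name acr_login_server is made up for illustration.

```bash
#!/usr/bin/env bash

# Validate an ACR name locally and print the login server it would get.
# acr_login_server is a hypothetical helper; it does not contact Azure,
# so it cannot detect a name that is already in use.
acr_login_server() {
  local name="$1"

  # ACR names are 5-50 characters long and alphanumeric only.
  if [[ ! "$name" =~ ^[A-Za-z0-9]{5,50}$ ]]; then
    echo "invalid ACR name: $name" >&2
    return 1
  fi

  # The login server is the lowercased name plus the azurecr.io suffix.
  printf '%s.azurecr.io\n' "$name" | tr '[:upper:]' '[:lower:]'
}

# Example usage:
#   acr_login_server kubeDockerRegistry
```

With a name that passes the check, the registry is created as follows.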

Z:\>az acr create --resource-group kube-demo-group --name kubeDockerRegistry --sku Basic

{

"adminUserEnabled": false,

"id": "/subscriptions/<redacted>/resourceGroups/kube-demo-group/providers/Microsoft.ContainerRegistry/registries/kubeDockerRegistry",

"location": "westus2",

"loginServer": "kubedockerregistry.azurecr.io",

"name": "kubeDockerRegistry",

"provisioningState": "Succeeded",

"resourceGroup": "kube-demo-group",

"sku": {

"name": "Basic",

"tier": "Basic"

},

"status": null,

"storageAccount": null,

"tags": {},

"type": "Microsoft.ContainerRegistry/registries"

}

At this point, if we knew the username and password for our ACR, we could run docker login to log in to the registry. However, as the first line of the response shows, the admin user is disabled by default.

We are going to use Azure CLI to handle the details of logging in to our container registry through docker.

Z:\>az acr login --name kubeDockerRegistry

Login Succeeded

We are going to use the aks-helloworld image to test deployments to our kubernetes cluster.

Z:\>docker pull neilpeterson/aks-helloworld:v1

...snip...

Status: Downloaded newer image for neilpeterson/aks-helloworld:v1

The Docker image naming format is registry[:port]/user/repo[:tag]. When the registry part is not specified, it is assumed to be hub.docker.com. If we want to push an image to our private container registry, we need to tag the image accordingly. In our case the name should be kubedockerregistry.azurecr.io/aks-helloworld:latest.
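To make the naming format concrete, here is a rough sketch of how an image name splits into its parts. It assumes a bash-compatible shell, treats the first path component as a registry only when it contains a dot or a colon or equals localhost (the heuristic Docker itself uses), and prints docker.io for Docker Hub. Digest references (name@sha256:...) are not handled, and parse_image is a made-up name.

```bash
#!/usr/bin/env bash

# Split an image reference into "registry repository tag".
# parse_image is a hypothetical helper for illustration only.
parse_image() {
  local ref="$1" registry repo tag first last

  first="${ref%%/*}"   # text before the first slash
  if [[ "$ref" == */* && ( "$first" == *.* || "$first" == *:* || "$first" == "localhost" ) ]]; then
    registry="$first"
    repo="${ref#*/}"
  else
    # No registry given: Docker falls back to Docker Hub (docker.io).
    registry="docker.io"
    repo="$ref"
  fi

  last="${repo##*/}"   # last path component, where a tag may appear
  if [[ "$last" == *:* ]]; then
    tag="${last##*:}"
    repo="${repo%:*}"
  else
    tag="latest"       # Docker's default tag
  fi

  printf '%s %s %s\n' "$registry" "$repo" "$tag"
}

# Example usage:
#   parse_image neilpeterson/aks-helloworld:v1
#   parse_image kubedockerregistry.azurecr.io/aks-helloworld:latest
```

Tagging the pulled image with the full private name is then a single command.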

Z:\>docker tag neilpeterson/aks-helloworld:v1 kubedockerregistry.azurecr.io/aks-helloworld:latest

Now, when we push the image, it will be sent to our private container registry.

Z:\>docker push kubedockerregistry.azurecr.io/aks-helloworld:latest

The push refers to repository [kubedockerregistry.azurecr.io/aks-helloworld]

752a9476c0fe: Pushed

1f6a42f2e735: Pushed

fc5f084dd381: Pushed

...snip..

851f3e348c69: Pushed

e27a10675c56: Pushed

latest: digest: sha256:fb47732ef36b285b1f3fbda69ab8411a430b1dc43823ae33d5992f0295c945f4 size: 6169

Associate Azure Container Registry and Kubernetes Cluster

We have set up our kubernetes cluster and a private container registry, and we are able to communicate with both of them.

The next step is to make them able to talk to each other.

We need to grant the acrpull permission to our cluster’s service principal so that it can pull docker images from our private container registry.

In order to do this, we need two pieces of information.

First, we need to get the service principal ID of the cluster, which we will refer to as SERVER_PRINCIPAL_ID.

Z:\>az aks show --resource-group kube-demo-group --name kube-demo --query "servicePrincipalProfile.clientId" --output=tsv

9646e977-98de-4217-beb3-28ec3d043290

Secondly, we need the resource ID of the private container registry, which we will refer to as ACR_RESOURCE_ID.

Z:\>az acr show --name kubeDockerRegistry --resource-group kube-demo-group --query "id" --output=tsv

/subscriptions/<redacted>/resourceGroups/kube-demo-group/providers/Microsoft.ContainerRegistry/registries/kubeDockerRegistry

Finally, we are going to grant the acrpull permission to SERVER_PRINCIPAL_ID on ACR_RESOURCE_ID.
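The two lookups and the role assignment can also be chained so that the IDs never have to be copied by hand. This is only a sketch, assuming a bash-compatible shell with the Azure CLI logged in; it reuses the same az commands shown in this section, and the function name grant_acr_pull is made up.

```bash
#!/usr/bin/env bash

# Grant the cluster's service principal pull access to a registry.
# grant_acr_pull is a hypothetical wrapper around the az commands above.
# Usage: grant_acr_pull <resource-group> <cluster-name> <registry-name>
grant_acr_pull() {
  local group="$1" cluster="$2" registry="$3"
  local sp_id acr_id

  # Service principal (client) ID of the AKS cluster.
  sp_id="$(az aks show --resource-group "$group" --name "$cluster" \
    --query "servicePrincipalProfile.clientId" --output tsv)" || return 1

  # Full resource ID of the container registry.
  acr_id="$(az acr show --resource-group "$group" --name "$registry" \
    --query "id" --output tsv)" || return 1

  az role assignment create --role acrpull --assignee "$sp_id" --scope "$acr_id"
}

# Example usage (needs a logged-in Azure CLI):
#   grant_acr_pull kube-demo-group kube-demo kubeDockerRegistry
```

The manual form of the final command, with the two values substituted by hand, looks like this.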

Z:\>az role assignment create --role acrpull --assignee <SERVER_PRINCIPAL_ID> --scope <ACR_RESOURCE_ID>

{

"canDelegate": null,

"id": "/subscriptions/<redacted>/resourceGroups/kube-demo-group/providers/Microsoft.ContainerRegistry/registries/kubeDockerRegistry/providers/Microsoft.Authorization/roleAssignments/<redacted>",

"name": "<redacted>",

"principalId": "<redacted>",

"resourceGroup": "kube-demo-group",

"roleDefinitionId": "/subscriptions/<redacted>/providers/Microsoft.Authorization/roleDefinitions/<redacted>",

"scope": "/subscriptions/<redacted>/resourceGroups/kube-demo-group/providers/Microsoft.ContainerRegistry/registries/kubeDockerRegistry",

"type": "Microsoft.Authorization/roleAssignments"

}

Install Helm

Helm is the package manager for Kubernetes.

We are going to use it to deploy our applications into our cluster.

Helm has a server-side component called tiller which needs to be initialised in the cluster to be able to create resources needed for deployment of our application.

Let’s do that first.

Z:\>helm init --history-max 200

$HELM_HOME has been configured at Z:\.helm.

Tiller (the Helm server-side component) has been installed into your Kubernetes Cluster.

Please note: by default, Tiller is deployed with an insecure 'allow unauthenticated users' policy.

To prevent this, run `helm init` with the --tiller-tls-verify flag.

For more information on securing your installation see: https://docs.helm.sh/using_helm/#securing-your-helm-installation

Happy Helming!

If we run helm list now, we will get an error message because helm is running with the default service account. That means it doesn’t have the required permissions to make any changes to our cluster.

Z:\>helm list

Error: configmaps is forbidden: User "system:serviceaccount:kube-system:default" cannot list configmaps in the namespace "kube-system"

We need to grant the required permissions to be able to install packages into our cluster.

NOTE: This is giving cluster-admin access to the tiller service, which is not something you should be doing in production.

Z:\>kubectl create clusterrolebinding add-on-cluster-admin --clusterrole=cluster-admin --serviceaccount=kube-system:default

clusterrolebinding.rbac.authorization.k8s.io/add-on-cluster-admin created

If we run helm list again, the error message should not show and we should be able to see the tiller pod running.

Z:\>kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

heapster-5d6f9b846c-t4n4h 2/2 Running 0 1h

kube-dns-autoscaler-746998ccf6-kc5hp 1/1 Running 0 1h

kube-dns-v20-7c7d7d4c66-n8tnd 4/4 Running 0 1h

kube-dns-v20-7c7d7d4c66-tk6pt 4/4 Running 0 1h

kube-proxy-vblvr 1/1 Running 0 1h

kube-svc-redirect-dz7tp 2/2 Running 0 1h

kubernetes-dashboard-67bdc65878-vwb67 1/1 Running 0 1h

metrics-server-5cbc77f79f-hhxxp 1/1 Running 0 1h

tiller-deploy-f8dd488b7-ls5j4 1/1 Running 0 53m

tunnelfront-66cd6b6875-29vvv 1/1 Running 0 1h

Deploy with helm

In Helm’s terminology, a recipe for a deployment is called a chart. A chart is made of a collection of templates and a file called values.yaml that provides the template values.

We are going to create a chart for our aks-helloworld application.

Z:\>helm create aks-helloworld-chart

Creating aks-helloworld-chart

Helm is going to create a folder named aks-helloworld-chart. The file that we are interested in is values.yaml.

The contents of the file look like this:

# Default values for simple-server.
# This is a YAML-formatted file.
# Declare variables to be passed into your templates.

replicaCount: 1

image:
  repository: nginx
  tag: stable
  pullPolicy: IfNotPresent

nameOverride: ""
fullnameOverride: ""

service:
  type: ClusterIP
  port: 80

ingress:
  enabled: false
  annotations: {}
    # kubernetes.io/ingress.class: nginx
    # kubernetes.io/tls-acme: "true"
  hosts:
    - host: chart-example.local
      paths: []

  tls: []
  #  - secretName: chart-example-tls
  #    hosts:
  #      - chart-example.local

resources: {}
  # We usually recommend not to specify default resources and to leave this as a conscious
  # choice for the user. This also increases chances charts run on environments with little
  # resources, such as Minikube. If you do want to specify resources, uncomment the following
  # lines, adjust them as necessary, and remove the curly braces after 'resources:'.
  # limits:
  #   cpu: 100m
  #   memory: 128Mi
  # requests:
  #   cpu: 100m
  #   memory: 128Mi

nodeSelector: {}

tolerations: []

affinity: {}

We are going to change the values under the image block. These values define which docker image, and which version of it, to pull.

For our application:

- image.repository is kubedockerregistry.azurecr.io/aks-helloworld

- image.tag is latest

We are not interested in ingress and tls blocks for now, but we are going to use them later.
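As an alternative to editing values.yaml, the same two values can be overridden on the command line with helm’s --set flag. The sketch below assumes a bash-compatible shell and uses helm upgrade --install, which installs the release if it does not exist yet and upgrades it otherwise; the function name deploy_helloworld is made up for illustration.

```bash
#!/usr/bin/env bash

# Deploy a chart while overriding the image values from the command line.
# deploy_helloworld is a hypothetical helper name.
# Usage: deploy_helloworld <release> <chart-dir> <image-repository> <image-tag>
deploy_helloworld() {
  local release="$1" chart_dir="$2" repository="$3" tag="$4"

  # --set overrides individual keys from values.yaml for this release only.
  helm upgrade --install "$release" "$chart_dir" \
    --set image.repository="$repository" \
    --set image.tag="$tag"
}

# Example usage (needs helm and a reachable cluster):
#   deploy_helloworld aks-helloworld aks-helloworld-chart \
#     kubedockerregistry.azurecr.io/aks-helloworld latest
```

Values passed this way are not written back to values.yaml, so the rest of this post keeps editing the file directly.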

Once we have updated these values, we can try deploying our application.

Z:\>helm install -n aks-helloworld aks-helloworld-chart

NAME: aks-helloworld

LAST DEPLOYED: Mon Apr 15 14:31:22 2019

NAMESPACE: default

STATUS: DEPLOYED

RESOURCES:

==> v1/Deployment

NAME READY UP-TO-DATE AVAILABLE AGE

aks-helloworld-aks-helloworld-chart 0/1 1 0 1s

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

aks-helloworld-aks-helloworld-chart-599d9658f6-4gvjt 0/1 ContainerCreating 0 1s

==> v1/Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

aks-helloworld-aks-helloworld-chart ClusterIP 10.0.242.36 <none> 80/TCP 1s

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=aks-helloworld-chart,app.kubernetes.io/instance=aks-helloworld" -o jsonpath="{.items[0].metadata.name}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl port-forward $POD_NAME 8080:80

The installation has kicked off. You can watch the progress of the deployment by running the following command.

Z:\>kubectl get deployments --watch

NAME READY UP-TO-DATE AVAILABLE AGE

aks-helloworld-aks-helloworld-chart 0/1 1 0 96s

aks-helloworld-aks-helloworld-chart 1/1 1 1 100s

As a result of the deployment of our application, the following resources were created in the kubernetes cluster:

- Pods are a group of one or more containers running inside the cluster.

- Services define a well-known name and a port for a set of pods inside our cluster. You might be running your application in multiple pods, but in order to connect to these pods you would need to know the IPs and ports assigned to them. Instead of connecting to pods individually, you can use services.

- Deployments are used to declare what resources you want to run inside your cluster, and kubernetes handles creating and updating the necessary resources. If a pod dies, it spins up another one. If a deployment is deleted, all the associated resources are deleted.

Install nginx ingress

Right now, we have pods and services running within the cluster, but they are not accessible from outside the cluster.

The resource that allows external requests to map into the services within the cluster is called ingress.

We need to create an ingress resource to tell kubernetes how the requests should be mapped.

Luckily, there is a premade chart that we can just install and enable ingress.

Z:\>helm install stable/nginx-ingress

NAME: kneeling-coral

LAST DEPLOYED: Fri Apr 12 13:29:54 2019

NAMESPACE: default

STATUS: DEPLOYED

RESOURCES:

==> v1/ConfigMap

NAME DATA AGE

kneeling-coral-nginx-ingress-controller 1 2s

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

kneeling-coral-nginx-ingress-controller-f66ddfd74-z6cgx 0/1 ContainerCreating 0 1s

kneeling-coral-nginx-ingress-default-backend-845d46bc44-jqzl4 0/1 ContainerCreating 0 1s

==> v1/Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kneeling-coral-nginx-ingress-controller LoadBalancer 10.0.218.217 <pending> 80:30833/TCP,443:31062/TCP 2s

kneeling-coral-nginx-ingress-default-backend ClusterIP 10.0.187.231 <none> 80/TCP 2s

==> v1/ServiceAccount

NAME SECRETS AGE

kneeling-coral-nginx-ingress 1 2s

==> v1beta1/ClusterRole

NAME AGE

kneeling-coral-nginx-ingress 2s

==> v1beta1/ClusterRoleBinding

NAME AGE

kneeling-coral-nginx-ingress 2s

==> v1beta1/Deployment

NAME READY UP-TO-DATE AVAILABLE AGE

kneeling-coral-nginx-ingress-controller 0/1 1 0 2s

kneeling-coral-nginx-ingress-default-backend 0/1 1 0 2s

==> v1beta1/Role

NAME AGE

kneeling-coral-nginx-ingress 2s

==> v1beta1/RoleBinding

NAME AGE

kneeling-coral-nginx-ingress 2s

NOTES:

The nginx-ingress controller has been installed.

It may take a few minutes for the LoadBalancer IP to be available.

You can watch the status by running 'kubectl --namespace default get services -o wide -w kneeling-coral-nginx-ingress-controller'

An example Ingress that makes use of the controller:

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
  name: example
  namespace: foo
spec:
  rules:
    - host: www.example.com
      http:
        paths:
          - backend:
              serviceName: exampleService
              servicePort: 80
            path: /
  # This section is only required if TLS is to be enabled for the Ingress
  tls:
    - hosts:
        - www.example.com
      secretName: example-tls

If TLS is enabled for the Ingress, a Secret containing the certificate and key must also be provided:

apiVersion: v1
kind: Secret
metadata:
  name: example-tls
  namespace: foo
data:
  tls.crt: <base64 encoded cert>
  tls.key: <base64 encoded key>
type: kubernetes.io/tls

Helm deployed all the ingress related resources. If we query the running services, we should see an ingress-controller with an external IP assigned.

Z:\>kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

aks-helloworld-aks-helloworld-chart ClusterIP 10.0.242.36 <none> 80/TCP 1d

kneeling-coral-nginx-ingress-controller LoadBalancer 10.0.218.217 13.77.160.221 80:30833/TCP,443:31062/TCP 1d

kneeling-coral-nginx-ingress-default-backend ClusterIP 10.0.187.231 <none> 80/TCP 1d

kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 1d

13.77.160.221 is the IP that we can use to connect to our cluster now.
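The external IP shows up as <pending> for the first few minutes, so a script cannot read it straight after installing the chart. A small polling helper is one way around that. This is a sketch assuming a bash-compatible shell; the function name wait_for_external_ip, the default of 30 attempts and the 10 second delay are arbitrary choices for illustration.

```bash
#!/usr/bin/env bash

# Poll a LoadBalancer service until it reports an external IP, then print it.
# wait_for_external_ip is a hypothetical helper; attempts and delay are
# illustrative defaults.
# Usage: wait_for_external_ip <service-name> [attempts] [delay-seconds]
wait_for_external_ip() {
  local service="$1" attempts="${2:-30}" delay="${3:-10}" ip i

  for ((i = 1; i <= attempts; i++)); do
    # The jsonpath expression yields nothing while the IP is still pending.
    ip="$(kubectl get svc "$service" \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null)"
    if [ -n "$ip" ]; then
      echo "$ip"
      return 0
    fi
    sleep "$delay"
  done

  echo "no external IP for $service after $attempts attempts" >&2
  return 1
}

# Example usage (needs kubectl pointing at the cluster):
#   wait_for_external_ip kneeling-coral-nginx-ingress-controller
```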

Let’s see what we get back when we make a request!

Z:\>curl 13.77.160.221

default backend - 404

NOTE: If you don’t have curl installed locally, you can install it by running:

scoop install curl

The response is default backend - 404, which is absolutely normal.

It means the ingress is up and running but doesn’t know how to map external requests to any of the internal services, so it falls back to the default backend, which only returns 404.

We are going to modify ingress block on our chart as follows:

ingress:
  enabled: true
  annotations:
    kubernetes.io/ingress.class: nginx
    # kubernetes.io/tls-acme: "true"
  hosts:
    - paths:
        - /

We have enabled the ingress, which tells helm to create an ingress resource that maps the root of our host to the internal aks-helloworld service.

It’s worth bumping up the version of the chart in Chart.yaml so that we can rollback if anything goes wrong.
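Bumping the version by hand is easy to forget, so it can be scripted. The following is a rough sketch, assuming a bash-compatible shell and a Chart.yaml whose version line is a plain, unquoted MAJOR.MINOR.PATCH value, which is what helm create generates; the function name bump_chart_version is made up.

```bash
#!/usr/bin/env bash

# Increase the patch number of the "version:" line in a Chart.yaml.
# bump_chart_version is a hypothetical helper; it only handles plain
# MAJOR.MINOR.PATCH versions without quotes or suffixes.
# Usage: bump_chart_version <path-to-Chart.yaml>
bump_chart_version() {
  local file="$1" current major minor patch next

  # Only a line starting with "version:" matches, so appVersion is left alone.
  current="$(sed -n 's/^version:[[:space:]]*//p' "$file" | head -n 1)"
  if [[ ! "$current" =~ ^[0-9]+\.[0-9]+\.[0-9]+$ ]]; then
    echo "unsupported version in $file: '$current'" >&2
    return 1
  fi

  IFS=. read -r major minor patch <<< "$current"
  next="$major.$minor.$((patch + 1))"

  # -i.bak works with both GNU and BSD sed; the backup is removed afterwards.
  sed -i.bak "s/^version:.*/version: $next/" "$file" && rm -f "$file.bak"
  echo "$next"
}

# Example usage:
#   bump_chart_version aks-helloworld-chart/Chart.yaml
```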

Let’s deploy the new version.

Z:\>helm upgrade aks-helloworld aks-helloworld-chart

Release "aks-helloworld" has been upgraded. Happy Helming!

LAST DEPLOYED: Mon Apr 15 14:51:19 2019

NAMESPACE: default

STATUS: DEPLOYED

RESOURCES:

==> v1/Deployment

NAME READY UP-TO-DATE AVAILABLE AGE

aks-helloworld-aks-helloworld-chart 1/1 1 1 19m

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

aks-helloworld-aks-helloworld-chart-599d9658f6-4gvjt 1/1 Running 0 19m

==> v1/Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

aks-helloworld-aks-helloworld-chart ClusterIP 10.0.242.36 <none> 80/TCP 19m

==> v1beta1/Ingress

NAME HOSTS ADDRESS PORTS AGE

aks-helloworld-aks-helloworld-chart * 80 1s

NOTES:

1. Get the application URL by running these commands:

http:///

Let’s test if the server is returning anything!

Z:\>curl -k https://13.77.160.221/

<!DOCTYPE html>

<html xmlns="http://www.w3.org/1999/xhtml">

<head>

<link rel="stylesheet" type="text/css" href="/static/default.css">

<title>Welcome to Azure Container Service (AKS)</title>

<script language="JavaScript">

function send(form){

}

</script>

</head>

<body>

<div id="container">

<form id="form" name="form" action="/"" method="post"><center>

<div id="logo">Welcome to Azure Container Service (AKS)</div>

<div id="space"></div>

<img src="/static/acs.png" als="acs logo">

<div id="form">

</div>

</div>

</body>

</html>

Create a DNS Zone

Accessing the cluster only by the IP is not ideal.

I want to get to the cluster by using a domain name. I am going to configure one of my custom domains to access the cluster.

First, we need to create a DNS Zone resource for our domain.

Z:\>az network dns zone create --resource-group=kube-demo-group -n idursun.dev

{

"etag": "00000002-0000-0000-7f3b-15e695f3d401",

"id": "/subscriptions/<redacted>/resourceGroups/kube-demo-group/providers/Microsoft.Network/dnszones/idursun.dev",

"location": "global",

"maxNumberOfRecordSets": 5000,

"name": "idursun.dev",

"nameServers": [

"ns1-07.azure-dns.com.",

"ns2-07.azure-dns.net.",

"ns3-07.azure-dns.org.",

"ns4-07.azure-dns.info."

],

"numberOfRecordSets": 2,

"registrationVirtualNetworks": null,

"resolutionVirtualNetworks": null,

"resourceGroup": "kube-demo-group",

"tags": {},

"type": "Microsoft.Network/dnszones",

"zoneType": "Public"

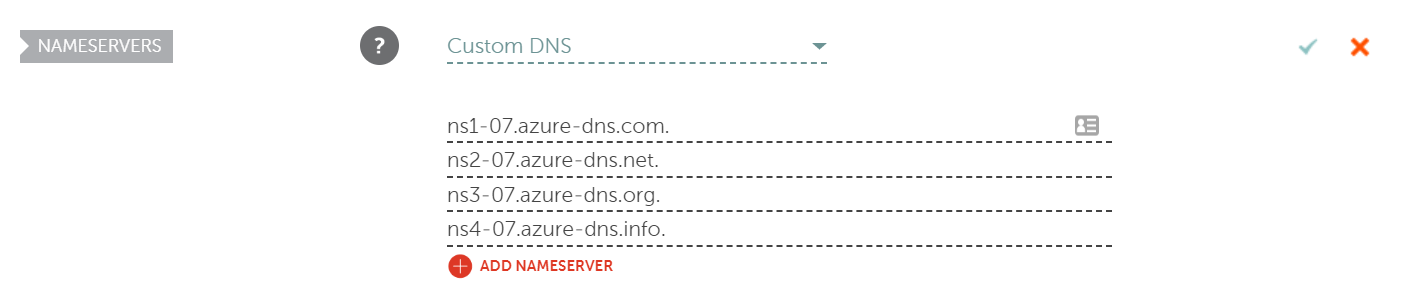

}We are interested in the name server values that are printed as a part of the JSON response. I am going to enter these values to my domain registrar’s portal so that the domain resolves into our DNS Zone.

In my registrar’s portal, it looks like this:

NOTE: Don’t forget to include trailing dots.

DNS propagation may take many hours to complete.

You can use nslookup to check whether the operation has completed. The name of the primary name server should change to ns1-07.azure-dns.com.

Z:\>nslookup -type=SOA idursun.dev

...snip...

idursun.dev

primary name server = ns1-07.azure-dns.com

...snip...

When a user types the domain into their browser, the lookup will reach our Azure DNS Zone, but the zone doesn’t yet know which IP address to return. We need to add an A-type record-set in our DNS Zone to point our domain to the cluster’s external IP.

Z:\>az network dns record-set a add-record --resource-group=kube-demo-group -z idursun.dev -n @ -a 13.77.160.221

{

"arecords": [

{

"ipv4Address": "13.77.160.221"

}

],

"etag": "5b0a3609-ab6b-4ca2-bb62-c86e978757f7",

"fqdn": "idursun.dev.",

"id": "/subscriptions/<redacted>/resourceGroups/kube-demo-group/providers/Microsoft.Network/dnszones/idursun.dev/A/@",

"metadata": null,

"name": "@",

"provisioningState": "Succeeded",

"resourceGroup": "kube-demo-group",

"targetResource": {

"id": null

},

"ttl": 3600,

"type": "Microsoft.Network/dnszones/A"

}

Let’s update our aks-helloworld-chart by adding our host value.

ingress:
  enabled: true
  annotations:
    kubernetes.io/ingress.class: nginx
    # kubernetes.io/tls-acme: "true"
  hosts:
    - host: idursun.dev
      paths:
        - /

I have added a host value to point to our custom domain.

Let’s bump the chart version and deploy again.

Z:\>helm upgrade aks-helloworld aks-helloworld-chart

Release "aks-helloworld" has been upgraded. Happy Helming!

LAST DEPLOYED: Mon Apr 15 16:26:22 2019

NAMESPACE: default

STATUS: DEPLOYED

RESOURCES:

==> v1/Deployment

NAME READY UP-TO-DATE AVAILABLE AGE

aks-helloworld-aks-helloworld-chart 1/1 1 1 115m

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

aks-helloworld-aks-helloworld-chart-599d9658f6-4gvjt 1/1 Running 0 115m

==> v1/Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

aks-helloworld-aks-helloworld-chart ClusterIP 10.0.242.36 <none> 80/TCP 115m

==> v1beta1/Ingress

NAME HOSTS ADDRESS PORTS AGE

aks-helloworld-aks-helloworld-chart idursun.com 80 95m

NOTES:

1. Get the application URL by running these commands:

http://idursun.dev/

We should be able to navigate to our cluster by using the domain.

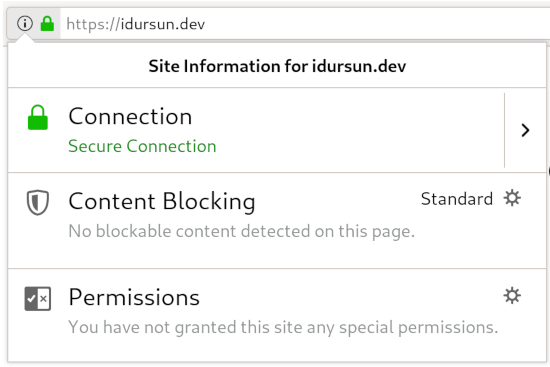

curl -L http://idursun.dev/

If you try to reach the website from a browser, you will be redirected to https because of the default HSTS policy. Most browsers will refuse to load the website because it doesn’t have a browser-trusted certificate.

Let’s fix this!

TLS Certificate

I am going to use letsencrypt.org to obtain a TLS certificate.

Let’s Encrypt is a well-known, non-profit certificate authority. Certificates issued by Let’s Encrypt are valid for 90 days and need to be renewed before they expire. Renewal of the certificate can be done manually or can be automated by running an ACME client.

Luckily, there is a kubernetes add-on called cert-manager which can automate the whole process for us. We are going to install it next, but before we do that there is one more thing to do.

We are going to add a CAA record-set to our DNS zone to declare that letsencrypt.org is allowed to issue certificates for our domain. CAs are required to check this record before issuing a certificate, as part of the baseline requirements they must follow to be trusted by major browsers.

Z:\>az network dns record-set caa add-record -g kube-demo-group -z idursun.dev -n @ --flags 0 --tag "issue" --value "letsencrypt.org"

{

"caaRecords": [

{

"flags": 0,

"tag": "issue",

"value": "letsencrypt.org"

}

],

"etag": "26a7e752-aac4-4816-87dd-ee7a8dc3e718",

"fqdn": "idursun.dev.",

"id": "/subscriptions/<redacted>/resourceGroups/kube-demo-group/providers/Microsoft.Network/dnszones/idursun.dev/CAA/@",

"metadata": null,

"name": "@",

"provisioningState": "Succeeded",

"resourceGroup": "kube-demo-group",

"targetResource": {

"id": null

},

"ttl": 3600,

"type": "Microsoft.Network/dnszones/CAA"

}

You can use https://caatest.co.uk/ to check if your CAA record is set up correctly.
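The same check can be done from a terminal. The sketch below assumes a bash-compatible shell with a dig version recent enough to know the CAA record type, and it assumes dig +short prints each record in the form 0 issue "letsencrypt.org"; the function name has_caa_issuer is made up for illustration.

```bash
#!/usr/bin/env bash

# Succeed if the domain has a CAA "issue" record naming the given CA.
# has_caa_issuer is a hypothetical helper; it assumes dig supports CAA
# lookups and prints records as: <flags> issue "<ca-domain>"
# Usage: has_caa_issuer <domain> <ca-domain>
has_caa_issuer() {
  local domain="$1" issuer="$2"

  # -F treats the pattern as a fixed string, so dots are matched literally.
  dig +short CAA "$domain" | grep -qF "issue \"$issuer\""
}

# Example usage:
#   has_caa_issuer idursun.dev letsencrypt.org && echo "CAA record found"
```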

Install cert-manager

cert-manager is an open source kubernetes add-on by jetstack that automates issuance and renewal of TLS certificates.

I have installed cert-manager:0.7 by following their installation guide.

Z:\>kubectl apply -f https://raw.githubusercontent.com/jetstack/cert-manager/release-0.7/deploy/manifests/00-crds.yaml

customresourcedefinition.apiextensions.k8s.io/certificates.certmanager.k8s.io created

customresourcedefinition.apiextensions.k8s.io/challenges.certmanager.k8s.io created

customresourcedefinition.apiextensions.k8s.io/clusterissuers.certmanager.k8s.io created

customresourcedefinition.apiextensions.k8s.io/issuers.certmanager.k8s.io created

customresourcedefinition.apiextensions.k8s.io/orders.certmanager.k8s.io created

Z:\>kubectl create namespace cert-manager

namespace/cert-manager created

Z:\>kubectl label namespace cert-manager certmanager.k8s.io/disable-validation=true

namespace/cert-manager labeled

Z:\>helm repo add jetstack https://charts.jetstack.io

"jetstack" has been added to your repositories

Z:\>helm repo update

Hang tight while we grab the latest from your chart repositories...

...Skip local chart repository

...Successfully got an update from the "jetstack" chart repository

...Successfully got an update from the "stable" chart repository

Update Complete. ⎈ Happy Helming!⎈

Z:\>helm install --name cert-manager --namespace cert-manager --version v0.7.0 jetstack/cert-manager

NAME: cert-manager

LAST DEPLOYED: Mon Apr 15 20:52:37 2019

NAMESPACE: cert-manager

STATUS: DEPLOYED

...snip...

Create an issuer

We have cert-manager up and running.

Next thing to do is to create an issuer resource to kick off requesting a TLS certificate from letsencrypt.

Let’s create a file with the following content and name it issuer-prod.yaml.

apiVersion: certmanager.k8s.io/v1alpha1
kind: Issuer
metadata:
  name: letsencrypt-prod
spec:
  acme:
    # You must replace this email address with your own.
    # Let's Encrypt will use this to contact you about expiring
    # certificates, and issues related to your account.
    email: <your email here>
    server: https://acme-v02.api.letsencrypt.org/directory
    privateKeySecretRef:
      # Secret resource used to store the account's private key.
      name: letsencrypt-prod
    # Enable the HTTP01 challenge mechanism for this Issuer
    http01: {}

NOTE: Don’t forget to change the email.

Z:\>kubectl apply -f issuer-prod.yaml

issuer.certmanager.k8s.io/letsencrypt-prod created

Now we can enable TLS in our aks-helloworld-chart chart and configure it to use the issuer that we have just created.

ingress:
  enabled: true
  annotations:
    kubernetes.io/ingress.class: nginx
    certmanager.k8s.io/issuer: "letsencrypt-prod"
    certmanager.k8s.io/acme-challenge-type: http01
  hosts:
    - host: idursun.dev
      paths:
        - /
  tls:
    - secretName: cert-prod
      hosts:
        - idursun.dev

We have added the certmanager.k8s.io/issuer annotation to specify which issuer to use and also set certmanager.k8s.io/acme-challenge-type to http01 to match the challenge type of the issuer.

cert-manager should pick up the changes, handle the communication with Let’s Encrypt, and finally create a certificate resource with the name cert-prod.

Let’s bump the chart version and upgrade our chart once more.

Z:\>helm upgrade aks-helloworld aks-helloworld-chart

It might take a while to complete the request, but eventually we should see our certificate created.

Z:\>kubectl get certificate

NAME

cert-prod

Z:\>kubectl describe certificate/cert-prod

..snip...

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Generated 51s cert-manager Generated new private key

Normal GenerateSelfSigned 51s cert-manager Generated temporary self signed certificate

Normal OrderCreated 50s cert-manager Created Order resource "cert-prod-1408931963"

Normal OrderComplete 26s cert-manager Order "cert-prod-1408931963" completed successfully

Normal CertIssued 26s cert-manager Certificate issued successfully

Let’s navigate to our domain and check if the HTTPS connection is secure.
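The certificate can also be inspected without a browser. This is a minimal sketch, assuming a bash-compatible shell with the openssl command-line tool installed; it prints the issuer and the expiry date of whatever certificate the server presents, and the function name cert_summary is made up.

```bash
#!/usr/bin/env bash

# Print the issuer and expiry date of the certificate served by a host.
# cert_summary is a hypothetical helper built on the openssl CLI.
# Usage: cert_summary <hostname>
cert_summary() {
  local host="$1"

  # s_client fetches the certificate; -servername sends the SNI host name
  # so that the ingress controller picks the right certificate.
  openssl s_client -connect "$host:443" -servername "$host" </dev/null 2>/dev/null \
    | openssl x509 -noout -issuer -enddate
}

# Example usage:
#   cert_summary idursun.dev
```

If the issuer line mentions Let’s Encrypt rather than a self-signed or default ingress certificate, the issuer and the ingress annotations are working together as intended.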

Conclusion

Phew!

That was quite a long post even though we cut corners wherever we could.

I hope this gives an overall understanding of how various pieces of technology come together to create a kubernetes cluster that is capable of routing HTTP requests to the services inside the cluster as well as issuing a TLS certificate and keeping it up to date.

As a next step, you might create an Azure DevOps CI/CD pipeline that deploys your application straight from the git repository to the cluster.

Cheers!